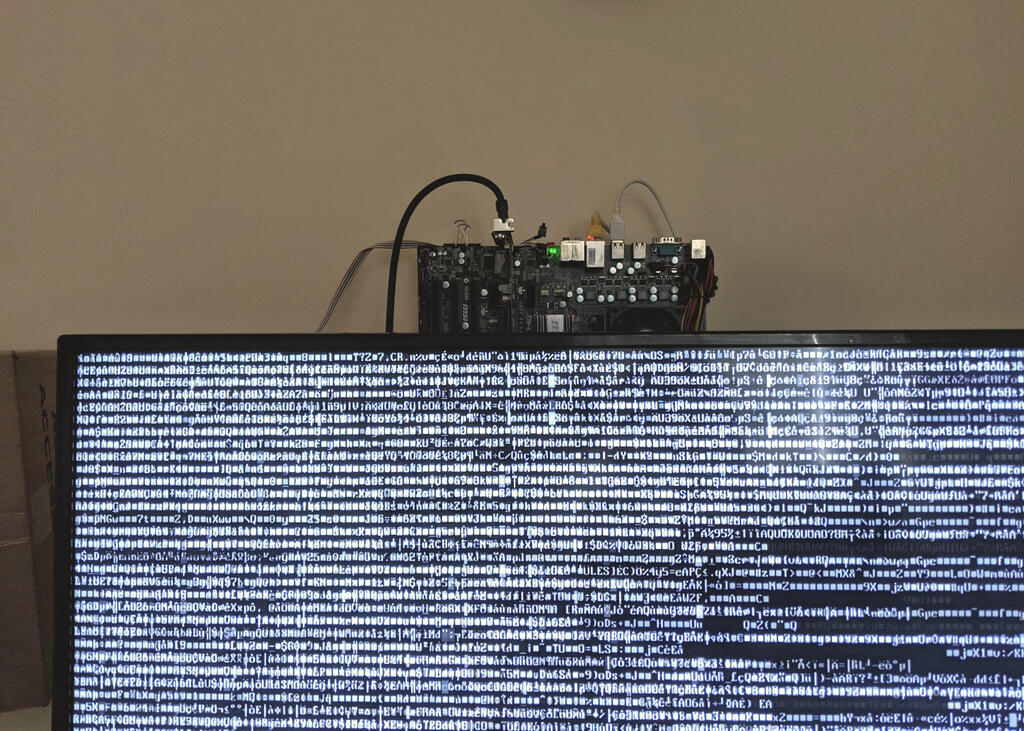

The whirring fan noise from the headless Linux server in my living room has been annoying. Most of the noise, in my case, comes from the graphics card's cooling fan. I was curious whether the GPU would overheat if I ran the machine with the GPU fan unplugged. Below is a little experiment I did.

Gathering Data

Reading GPU temperature

The lm_sensors Linux package provides sensor-reading functionality, including GPU temperatures; a quick call of the sensors command shows my AMD Radeon HD 4350 running at +39.5°C:

$ sensors

...

radeon-pci-0100

Adapter: PCI adapter

temp1: +39.5°C (crit = +120.0°C, hyst = +90.0°C)

Capturing Data

To continue with the experiment, I quickly hacked together a bash one-liner that reads the GPU temperature and appends it, along with an epoch timestamp, to a text file every time it's invoked.

$ cat record_gpu_temp.sh

sensors | grep -A 2 "radeon" | rg 'temp1:.*?\+(.*?)°C' -or '$1' | tr '\n' ' ' >> temps.txt && echo $EPOCHSECONDS >> temps.txt
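As an aside, the same extraction step can be sketched in Python for readers who don't have rg installed. This is only an illustration, not the script I ran: the function name parse_gpu_temp and the embedded SAMPLE_OUTPUT are made up for the example, and it assumes the usual sensors layout where each chip's block is separated from the next by a blank line.

```python
import re

# Sample text modelled on the sensors output shown earlier (assumed layout).
SAMPLE_OUTPUT = """radeon-pci-0100
Adapter: PCI adapter
temp1:        +39.5°C  (crit = +120.0°C, hyst = +90.0°C)
"""

# Matches e.g. "temp1:  +39.5°C" and captures the signed number.
TEMP1_RE = re.compile(r"temp1:\s*([+-]?\d+(?:\.\d+)?)°C")


def parse_gpu_temp(sensors_output, chip_prefix="radeon"):
    """Return temp1 (in °C) of the first chip whose name starts with
    chip_prefix, or None if no such reading is found."""
    in_chip = False
    for line in sensors_output.splitlines():
        stripped = line.strip()
        if not stripped:
            in_chip = False  # a blank line ends the current chip block
            continue
        if stripped.startswith(chip_prefix):
            in_chip = True  # entering the block for the chip we want
            continue
        if in_chip:
            match = TEMP1_RE.match(stripped)
            if match:
                return float(match.group(1))
    return None
```

Calling parse_gpu_temp(SAMPLE_OUTPUT) returns the reading as a float; in real use the text would come from running sensors rather than from a hard-coded string.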

I then used watch to invoke the one-liner automatically every second:

$ watch -n 1 bash record_gpu_temp.sh

The text file would look like this after a few dozen runs:

50.5 1633325134

50.5 1633325135

50.5 1633325136

50.5 1633325137

50.5 1633325138

50.0 1633325139

51.0 1633325140

...
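For reference, a file in this format can be loaded and plotted with a few lines of Python. The sketch below is a reconstruction under assumptions, not the exact plotting code behind the chart in the next section: parse_log and plot_log are hypothetical names, the output filename is arbitrary, and matplotlib is imported lazily so the parsing part works without it.

```python
def parse_log(lines):
    """Turn 'temperature epoch' lines into (elapsed_seconds, temp_c) pairs.

    Lines that don't contain exactly two numeric fields are skipped.
    """
    readings = []
    for line in lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # blank or incomplete line
        try:
            temp_c, epoch = float(parts[0]), int(parts[1])
        except ValueError:
            continue  # non-numeric content
        readings.append((epoch, temp_c))
    if not readings:
        return []
    start = readings[0][0]  # measure time relative to the first sample
    return [(epoch - start, temp_c) for epoch, temp_c in readings]


def plot_log(path="temps.txt", out="gpu_temps.png"):
    """Plot temperature against elapsed time and save it as an image."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file; the server is headless
    import matplotlib.pyplot as plt

    with open(path) as f:
        points = parse_log(f)
    fig, ax = plt.subplots()
    ax.plot([t for t, _ in points], [c for _, c in points])
    ax.set_xlabel("Elapsed time (s)")
    ax.set_ylabel("GPU temperature (°C)")
    fig.savefig(out)
```

Plotting elapsed seconds instead of raw epoch values keeps the x-axis readable; plot_log() with its defaults reads temps.txt from the current directory.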

The Experiment

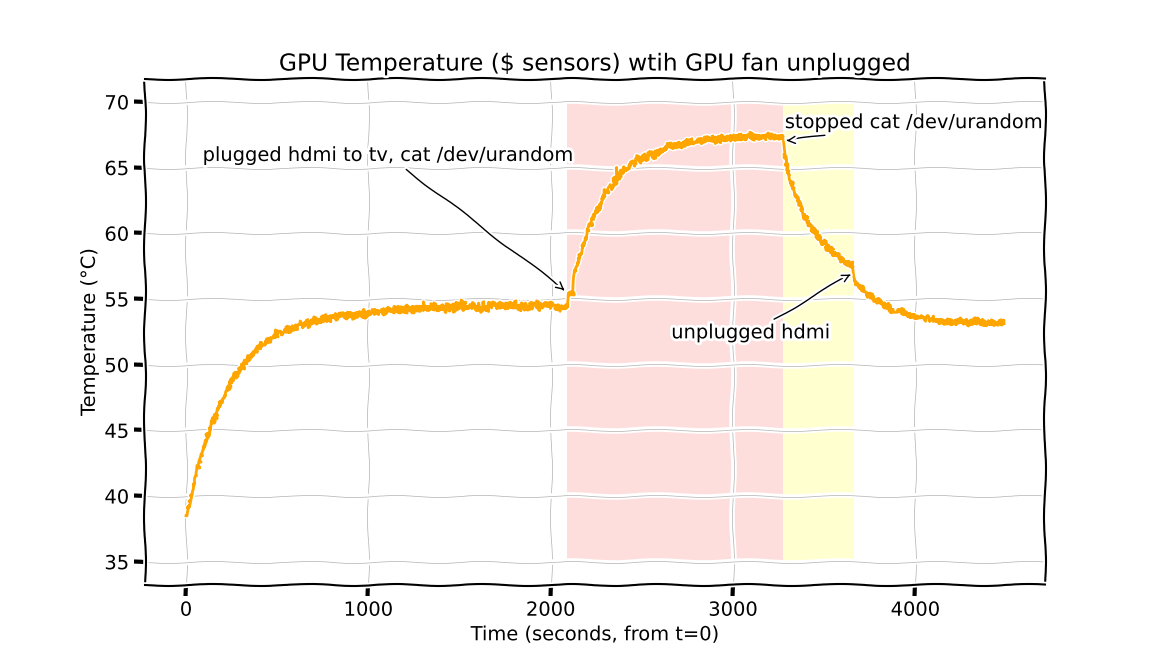

Running the machine under a few different scenarios while temperatures were being captured, plus a quick matplotlib plot of the data, yielded the following:

Test 0: Baseline

The first test is for when the graphics card is simply seated in the motherboard, but no external display is connected; the GPU is basically a "dummy". In my test, the GPU reached an equilibrium temperature of 54.5°C.

Test 1: HDMI plugged in, heavy console usage

The next test is for when the graphics card is powering an external display and is actively rendering. Running cat /dev/urandom forces the GPU to constantly render characters to the video console. In my test, the temperature rose to 67°C, the highest of the whole experiment.

Test 2: HDMI plugged in, low workload

The next test is for when the GPU is powering an external display but not doing extensive rendering on screen. As soon as the cat /dev/urandom was terminated, the temperature dropped sharply to around 57°C.

Test 3: Unplugging HDMI

Once the HDMI cable was unplugged, the GPU temperature quickly dropped back down to its baseline temperature.

Conclusion

With my specific graphics card and setup, it seems I could get away with running the server without the GPU fan on. It would still be nice, though, to add some process that monitors the GPU temperature and alerts if it exceeds the critical temperature.
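Such a monitor could be sketched roughly as follows. None of this is something I actually run: the function names, the 20°C safety margin, and the 60-second interval are placeholder assumptions, and the only value taken from the experiment is the 120°C crit figure that sensors reports for this card. The regex simply takes the first temp1 that appears after the first "radeon" in the output, much like the grep in the recording script.

```python
import re
import subprocess
import time

CRIT_C = 120.0         # "crit" value sensors reports for this card
WARN_MARGIN_C = 20.0   # hypothetical margin: warn well before crit


def extract_temp(sensors_output):
    """Return the first temp1 reading after 'radeon', or None."""
    match = re.search(
        r"radeon.*?temp1:\s*([+-]?\d+(?:\.\d+)?)°C",
        sensors_output,
        re.DOTALL,  # let .*? span the lines between chip name and temp1
    )
    return float(match.group(1)) if match else None


def read_gpu_temp():
    """Run the sensors command and parse the GPU temperature from it."""
    result = subprocess.run(
        ["sensors"], capture_output=True, text=True, check=True
    )
    return extract_temp(result.stdout)


def is_too_hot(temp_c, crit_c=CRIT_C, margin_c=WARN_MARGIN_C):
    """True when a reading exists and is within margin_c of crit_c."""
    return temp_c is not None and temp_c >= crit_c - margin_c


def monitor(interval_s=60):
    """Poll forever, printing a warning whenever the GPU runs too hot."""
    while True:
        temp_c = read_gpu_temp()
        if is_too_hot(temp_c):
            print(f"WARNING: GPU at {temp_c:.1f}°C", flush=True)
        time.sleep(interval_s)
```

Calling monitor() starts the loop; swapping the print for an email or a push notification would be the obvious next step. Warning at a margin below crit, instead of at crit itself, leaves some time to react before the card is actually in danger.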

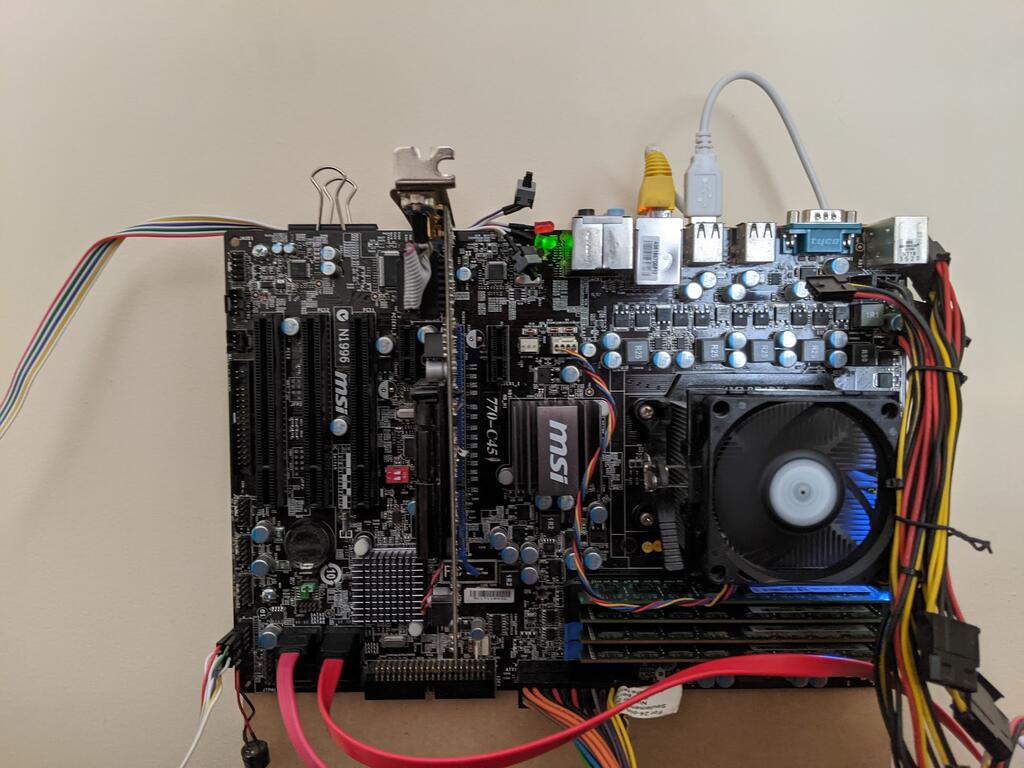

Why not just unplug the graphics card?

Ideally, the best solution would be to run the server without a graphics card at all. Sadly, though, the old motherboard I have happens to be one of those that require a working video device during POST, and it refuses to boot in the absence of one (1 long, 2 short BIOS error beep code).